Data Authenticity at the Edge in the Age of Generative AI

PhD - Gent | More than two weeks ago

How can we leverage data authenticity mechanisms on chip for sharing trustworthy derived insights?

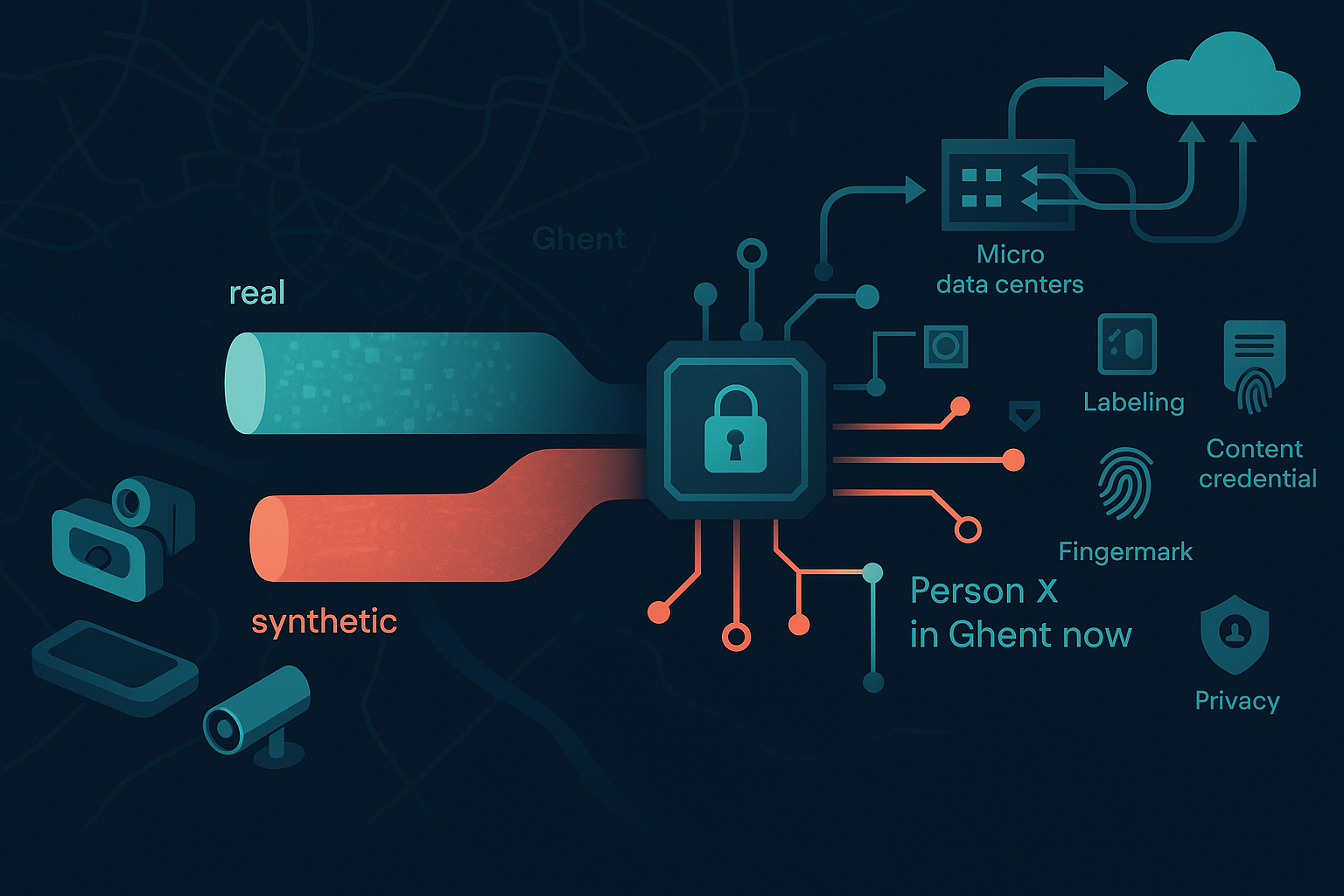

We invite applications for a PhD investigating how to guarantee the authenticity and provenance of sensor data produced at the network edge in an era where generative AI can fabricate highly convincing signals. Edge devices (smartphones, cameras, IoT sensors) initiate a data point’s lifecycle under tight resource constraints and heightened exposure to tampering, making early verification and end-to-end traceability critical.

This project will design and evaluate methods that (i) harden the sensing pipeline so software can verify the trustworthiness of raw sensor outputs, (ii) construct provenance chains that preserve verifiable authenticity as data are transformed into higher-level statements (e.g., turning GPS readings into “Person X is in Ghent now”), and (iii) implement these mechanisms efficiently on resource-constrained edge platforms. Outcomes will include a state-of-the-art review; a robust framework for labeling, fingerprinting, and watermarking sensor streams; and prototype implementations validated on real and synthetic datasets across representative edge hardware.

The research will advance scientific understanding at the intersection of edge computing, generative AI, and data governance, and aims to inform interoperable practices aligned with emerging content provenance approaches. The project is supervised by Prof. Pieter Colpaert and Dr. Tanguy Coenen in collaboration with imec’s AI & Algorithms research groups, with results to be disseminated via leading conferences, journals, and demonstrators.
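To make aim (ii) more concrete, the following is a minimal illustrative sketch of a provenance chain, not the project's method. It assumes a hypothetical shared device key (`DEVICE_KEY`) and uses an HMAC as a stand-in for whatever on-chip signing mechanism the research would actually investigate; the function names (`sign_reading`, `derive`, `verify`) and the GPS values are invented for illustration. A real design would more likely rely on asymmetric signatures rooted in secure hardware, so that verifiers never hold the signing key.

```python
import hashlib
import hmac
import json

# Hypothetical device key (assumption); a real sensor would keep its key in secure hardware.
DEVICE_KEY = b"demo-device-key"


def _canonical(obj: dict) -> bytes:
    """Serialize deterministically so equal records always hash identically."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()


def _digest(record: dict) -> str:
    """SHA-256 fingerprint of a full provenance record."""
    return hashlib.sha256(_canonical(record)).hexdigest()


def sign_reading(reading: dict, key: bytes = DEVICE_KEY) -> dict:
    """Root link: a raw sensor reading tagged with an HMAC over its content."""
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"kind": "reading", "data": reading, "tag": tag}


def derive(statement: str, parents: list) -> dict:
    """Derived link: a higher-level statement bound to the hashes of its inputs."""
    return {
        "kind": "derived",
        "statement": statement,
        "parents": [_digest(p) for p in parents],
    }


def verify(derived: dict, parents: list, key: bytes = DEVICE_KEY) -> bool:
    """Check that the inputs match the recorded hashes and carry valid tags."""
    if derived["parents"] != [_digest(p) for p in parents]:
        return False
    for parent in parents:
        expected = hmac.new(key, _canonical(parent["data"]), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, parent["tag"]):
            return False
    return True


# Illustrative GPS reading (values are made up) and a statement derived from it.
gps = sign_reading({"lat": 51.05, "lon": 3.72, "t": "2026-01-01T12:00:00Z"})
claim = derive("Person X is in Ghent now", [gps])
```

Calling `verify(claim, [gps])` succeeds only if the reading is exactly the one the statement was derived from and its tag is valid under the device key. Changing the coordinates after the fact breaks either the hash link or the tag, which is the property a provenance chain is meant to preserve across transformations.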

Required background: Information Engineering Technology, Computer Science or equivalent

Type of work: 70% data specification modeling, 30% literature on hardware mechanisms

Supervisor: Pieter Colpaert

Co-supervisor: Tanguy Coenen

Daily advisor: Pieter Colpaert

The reference code for this position is 2026-113. Mention this reference code on your application form.