Power consumption versus performance trade-offs for GPUs and emerging hardware architectures

PhD - Gent

Today’s platforms, ranging from automotive systems, UAVs, AUVs and small satellites to healthcare devices, are increasingly equipped with advanced sensors that generate vast amounts of data in real time. The need for real-time decision-making, combined with strict limits on storage capacity and communication bandwidth, has made on-board AI processing essential. However, the limited power budgets available on these platforms severely constrain the ability to meet the high computational demands of deep neural networks. This is particularly true for GPUs: although they are the dominant choice for AI acceleration, their substantial energy consumption further restricts their use in on-board applications.

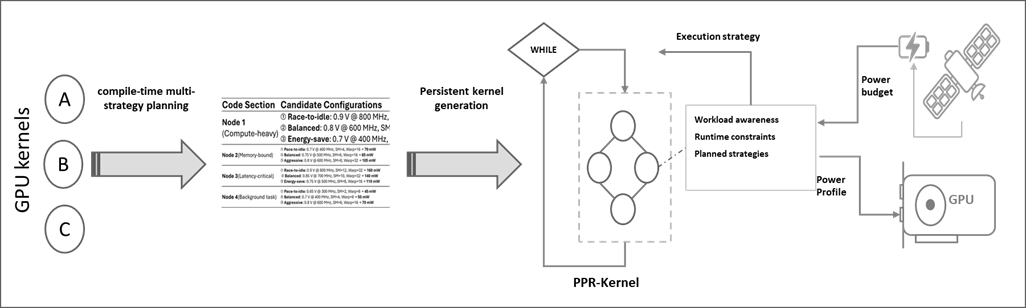

In this PhD, we will adopt an innovative approach to optimizing the execution of a computation graph (e.g., of a neural network and/or an image processing algorithm) on hardware accelerator architectures (e.g., GPUs), using both state-of-the-art and emerging technologies, in order to trade off power against performance. We focus on (i) power-aware programming approaches that take the power consumption of algorithms into account alongside performance requirements; (ii) compile-time multi-strategy planning, in which code is analyzed to classify execution phases and generate optimized strategies for energy/performance trade-offs; and (iii) run-time optimization, in which the run-time system selects the execution strategy and dynamically manages the hardware power profile based on constraints and input data. The approach will be validated on an edge GPU, but it is designed to be integrated into simulation environments for new 3D architectures at imec. As a result, the outcome of the PhD will be applicable not only to GPUs but also to a wide range of hardware architectures that benefit from combining compile-time and dynamic run-time power versus performance trade-offs.
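The interplay between compile-time multi-strategy planning and run-time strategy selection can be illustrated with a minimal Python sketch. It assumes that each candidate execution strategy has already been characterized by a single average power and latency figure; the `Strategy` class, the `pareto_front` and `select_strategy` functions, the strategy names and all numbers are hypothetical, chosen for illustration only, and are not taken from the project or measured on any GPU. The compile-time step keeps only Pareto-optimal strategies, and the run-time step picks the lowest-energy strategy that satisfies the current power budget and latency deadline.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Strategy:
    """One compile-time execution plan for a computation graph (hypothetical model)."""
    name: str
    power_w: float     # assumed average power draw while running the plan, in watts
    latency_ms: float  # assumed time to execute the graph once, in milliseconds

    @property
    def energy_mj(self) -> float:
        # Energy per inference: watts * milliseconds = millijoules
        return self.power_w * self.latency_ms


def pareto_front(strategies: List[Strategy]) -> List[Strategy]:
    """Compile-time step: drop plans that another plan beats or matches on both
    power and latency (strictly better on at least one); sort by power."""
    front = []
    for s in strategies:
        dominated = any(
            o.power_w <= s.power_w and o.latency_ms <= s.latency_ms
            and (o.power_w < s.power_w or o.latency_ms < s.latency_ms)
            for o in strategies
        )
        if not dominated:
            front.append(s)
    return sorted(front, key=lambda s: s.power_w)


def select_strategy(front: List[Strategy], power_budget_w: float,
                    deadline_ms: float) -> Optional[Strategy]:
    """Run-time step: among plans that fit the power budget and the deadline,
    return the one with the lowest energy per inference (None if none fits)."""
    feasible = [s for s in front
                if s.power_w <= power_budget_w and s.latency_ms <= deadline_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda s: s.energy_mj)


# Illustrative, made-up candidate plans (not measurements).
candidates = [
    Strategy("fp16-max-clock", power_w=15.0, latency_ms=8.0),
    Strategy("fp16-mid-clock", power_w=9.0, latency_ms=11.0),
    Strategy("int8-low-clock", power_w=5.0, latency_ms=14.0),
    Strategy("fp32-mid-clock", power_w=12.0, latency_ms=16.0),  # dominated by fp16-mid-clock
]
front = pareto_front(candidates)
print([s.name for s in front])  # → ['int8-low-clock', 'fp16-mid-clock', 'fp16-max-clock']
choice = select_strategy(front, power_budget_w=10.0, deadline_ms=20.0)
print(choice.name)  # → int8-low-clock
```

Minimizing energy per inference among feasible plans is only one possible run-time policy. A realistic run-time system would also need to account for input-dependent execution phases and for the cost of switching hardware power profiles, both of which this sketch ignores.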

We are looking for a motivated PhD candidate interested in bridging the gap between AI/image processing and hardware acceleration architectures. This research will explore novel techniques for reducing power consumption by combining compile-time hardware-aware mappings and optimizations with run-time dynamic execution strategies. Along the way, the candidate will gain expertise in deep neural network inference, the efficient mapping of AI computations to hardware architectures, and GPU profiling and optimization methods using multi-fidelity simulation and profiling tools (e.g., LLMCompass, Accelergy+Timeloop, or similar).

Required background: Master of Science in Computer Science, Master of Science in Electrical Engineering

Type of work: 30% modeling, 30% programming, 30% experimental, 10% literature

Supervisor: Bart Goossens

Co-supervisor: Mohsen Nourazar

Daily advisor: Joshua Klein

The reference code for this position is 2026-076. Mention this reference code on your application form.