Sensor fusion algorithms

With cooperative fusion, imec introduces a method for combining the inputs of various sensors that significantly outperforms the standard algorithms.

Conventionally, there are two ways to approach sensor fusion:

- Early fusion: sensor data is fused directly after it has been captured.

- Late fusion: sensor data is fused after it has been highly processed.
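To make the difference concrete, here is a minimal sketch of both approaches. It assumes each sensor delivers one confidence score per grid cell of the scene. The `detect` helper, the averaging rule, the AND rule and the 0.5 threshold are illustrative assumptions, not imec's implementation.

```python
def detect(scores, threshold=0.5):
    """Toy detector: flag every cell whose score reaches the threshold."""
    return [s >= threshold for s in scores]


def early_fusion(camera, radar, threshold=0.5):
    # Fuse the raw per-cell scores first (assumed rule: plain average),
    # then run a single detector on the fused signal.
    fused = [(c + r) / 2 for c, r in zip(camera, radar)]
    return detect(fused, threshold)


def late_fusion(camera, radar, threshold=0.5):
    # Each sensor runs its own detector; only the final decisions
    # are combined (assumed rule: both sensors must agree).
    camera_hits = detect(camera, threshold)
    radar_hits = detect(radar, threshold)
    return [c and r for c, r in zip(camera_hits, radar_hits)]


# Hypothetical scores for three cells of a scene.
camera = [0.1, 0.4, 0.9]
radar = [0.2, 0.8, 0.7]

early = early_fusion(camera, radar)
late = late_fusion(camera, radar)
print(early)  # [False, True, True]
print(late)   # [False, False, True]
```

In the middle cell the camera evidence is weak but the radar evidence is strong. In this toy setup early fusion still detects the object, while late fusion discards it because the camera detector has already made its decision on its own.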

Imec develops algorithms for a new approach called cooperative fusion: sensors negotiate early on to decide which information in a scene is relevant. That information can then be captured and relayed in more detail.

More efficient decision-making

Central to cooperative fusion is a feedback loop. The different sensors exchange and compare low-level (unprocessed) or middle-level (preprocessed) information. In that way, they influence each other’s detection process to focus on what’s really relevant.

Such cooperative fusion outperforms conventional late fusion by up to 10%. It’s especially effective in difficult scenarios and borderline cases. For instance, when the camera is partially blinded by a strong light, the radar helps it pinpoint locations where the detection threshold should be lowered, so that a faintly visible object is still detected.
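The blinded-camera example can be sketched in a few lines. This is a simplified illustration, assuming per-cell confidence scores from both sensors. The function name `cooperative_detect` and the values of `base_threshold`, `lowered_threshold` and `radar_gate` are hypothetical and chosen only to show the feedback idea.

```python
def cooperative_detect(camera_scores, radar_scores,
                       base_threshold=0.6, lowered_threshold=0.3,
                       radar_gate=0.7):
    """Camera detection whose threshold is guided by radar evidence.

    Where the radar score reaches `radar_gate`, the camera threshold
    drops to `lowered_threshold`, so a faint camera response can still
    count as a detection. All parameter values are illustrative.
    """
    detections = []
    for cam, rad in zip(camera_scores, radar_scores):
        threshold = lowered_threshold if rad >= radar_gate else base_threshold
        detections.append(cam >= threshold)
    return detections


# Hypothetical scene with four cells. In cell 1 the camera is partially
# blinded (weak score 0.4) while the radar clearly sees an object.
camera_scores = [0.1, 0.4, 0.8, 0.4]
radar_scores = [0.1, 0.9, 0.2, 0.2]

camera_only = [c >= 0.6 for c in camera_scores]
cooperative = cooperative_detect(camera_scores, radar_scores)
print(camera_only)  # [False, False, True, False]
print(cooperative)  # [False, True, True, False]
```

Cells 1 and 3 have the same weak camera score, but only cell 1 is accepted, because only there does the radar support lowering the threshold. The threshold is relaxed locally rather than across the whole image.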

This system of cooperative fusion retains the modularity of late fusion. It therefore allows you to plug in or replace existing black-box systems, such as closed systems provided by sensor vendors or pre-trained neural networks. This means better or newer versions of sensors will immediately result in better fusion, without the need to retrain the AI.
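The modularity argument can be illustrated with a sketch in which the fusion step only depends on a small, assumed interface: a function that turns raw sensor data into per-cell scores. The `Scorer` type, the `fuse` function and the toy camera and radar models below are hypothetical stand-ins for black-box vendor systems or pre-trained networks.

```python
from typing import Callable, List, Sequence

# Assumed black-box interface: raw data in, per-cell confidence scores out.
Scorer = Callable[[Sequence[float]], List[float]]


def fuse(camera_raw, radar_raw, camera_scorer: Scorer, radar_scorer: Scorer,
         base_threshold=0.6, lowered_threshold=0.3, radar_gate=0.7):
    """Fusion logic that knows nothing about the scorers' internals."""
    cam = camera_scorer(camera_raw)
    rad = radar_scorer(radar_raw)
    return [c >= (lowered_threshold if r >= radar_gate else base_threshold)
            for c, r in zip(cam, rad)]


def old_camera(raw):
    # Toy stand-in for an older, less sensitive camera pipeline.
    return [0.5 * x for x in raw]


def new_camera(raw):
    # Toy stand-in for a newer, more sensitive camera pipeline.
    return [min(1.0, 0.9 * x) for x in raw]


def radar(raw):
    # Toy stand-in: the radar already reports scores directly.
    return list(raw)


camera_raw = [0.2, 1.0, 0.8]
radar_raw = [0.1, 0.2, 0.9]

with_old = fuse(camera_raw, radar_raw, old_camera, radar)
with_new = fuse(camera_raw, radar_raw, new_camera, radar)
print(with_old)  # [False, False, True]
print(with_new)  # [False, True, True]
```

Swapping `old_camera` for `new_camera` changes the result without touching `fuse`, which is the property the paragraph above describes: a better component improves the fused output while the fusion logic stays as it is.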

Read this article for an in-depth view of pedestrian detection using radar-video sensor fusion.

Algorithms for various sensor fusion use cases

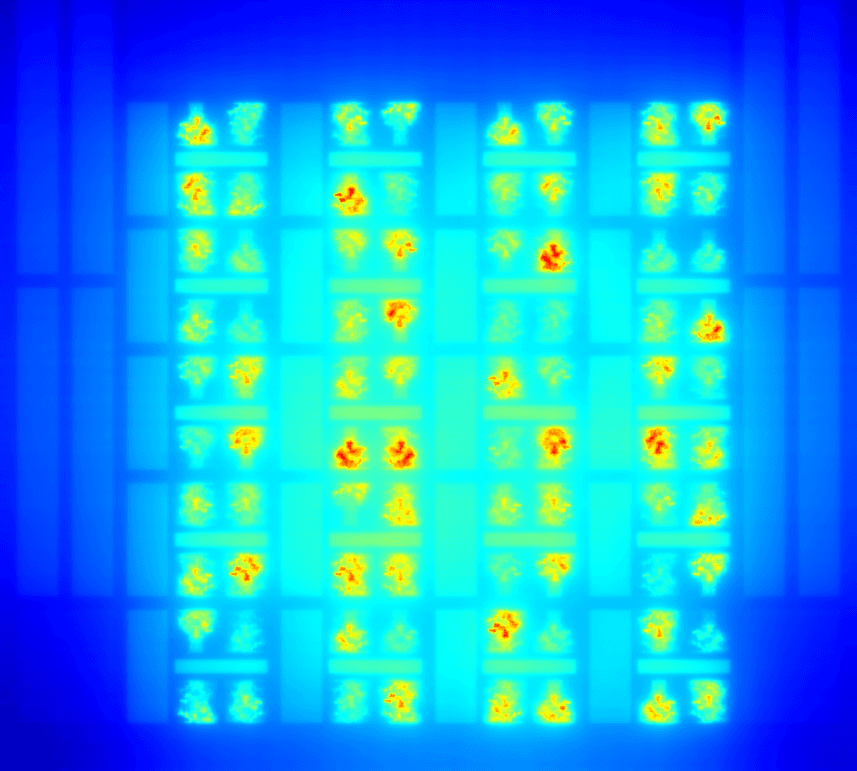

Most R&D today is focused on the fusion of video and radar images for automotive applications, traffic monitoring and smart manufacturing. The cooperative fusion process, however, is generic. This means you can apply it to a wide range of solutions that also incorporate other sensors, such as thermal cameras and lidar.

With this in mind, we’d love to discuss your challenge and use cases.